#38. The Runway GEN-3 Dilemma: Cutting-Edge AI vs. User-Friendly Software

Weighing the Trade-offs in this Updated AI Video Editing Tool.

Hello all. I assume all is well. With the barrage of “Thought Leadership” that your inbox has probably endured as of late, I chose not to add to it for a bit. So here we go.

Table of Contents

1. Can You Help Save a Rescue Pet?

2. Runway GEN-3 Alpha

3. LLM vs. LMM: What is the difference?

1. Can You Help Save a Rescue Pet?

My family rescues and adopts pets that have been through rough going. Their lives are often abbreviated and our hearts are always broken.

So, if you’ve got five bucks burning a hole in your pocket and can help “Gigi,” please check out the link below. We’re a “no kill family” so it gets expensive at times. Hit me at pmberry [at] mac [dot] com if you prefer to send directly to the vet. Some prefer to avoid the fees and deal directly.

Thanks for the read! Let’s move on to Runway GEN-3.

Source: RunwayML

2. Runway GEN-3: An In-Depth Look at the AI Video Revolution That's More Evolution Than Revolution

The long-awaited Runway GEN-3 has finally (almost) arrived, and it's generating as much buzz as controversy. While it promises a new era of AI-powered video editing, the reality is a bit more nuanced. Let’s look at its strengths, its weaknesses, and the implications for the future of video creation.

It Is Not Done

With all of the barking coming out of this place these past months, I’d bet 20 bucks they’re feeling the heat to get something on the street that anyone (who is paying, that is) can get their hands on. Paying for something sold as “Alpha” seems a bit cheesy - but at least it is not vaporware. Sounds like some knucklehead from marketing crashed a strategy sesh and got in someone’s ear. So no paid subscription = GEN-2.

Limiting Alpha to paying customers is their right. In addition to driving more subs, it keeps the load demand in check, which is helpful with buggy software. If you label your own software “Alpha,” expect bugs.

At least they are consistent in their hubris if nothing else. I asked for a limited login to review GEN-3 a couple of months ago and was referred to an obscure Twitter account with some of their latest/greatest slo-mo video clips done by a fan with access. It looked great, circa Fall 2023. I was required to purchase an enterprise license for a test log-in.

Let’s get this over with.

Runway GEN-3: The Good, Bad, & Ugly!

The Good: Impressive AI Capabilities and Streamlined Workflow

A. Text-to-video generation

Let's start with the positives. Runway GEN-3 showcases some strong AI capabilities. The text-to-video generation, while not parfait, is a significant step forward. It can produce short, stylized clips based on text prompts, opening up new creative possibilities. The results are far from polished, often resembling abstract art rather than cinematic masterpieces, but the potential is undeniable.

B. Image-to-image generation

The image-to-image generation is good. It allows users to modify existing footage by describing desired changes in text. Need to change the weather in a scene? Simply type "make it sunny" and watch the AI work its magic. It's not always flawless, but the ability to manipulate video with natural language is groundbreaking. If this is ever perfected, it will have my undivided attention.

C. Inpainting and outpainting

Inpainting and outpainting, which allow for seamless content removal and extension, are equally impressive. These features are surprisingly effective, particularly for simple tasks like removing unwanted objects or extending backgrounds. For more complex edits, the results can be hit-or-miss, but the technology shows great promise.

D. Redesigned interface that streamlines the video editing workflow

GEN-3 also introduces a redesigned interface that streamlines the video editing workflow. The intuitive layout and simplified controls make it easier for both beginners and experienced editors to navigate the software. The integration of AI features into the editing process is seamless, allowing users to experiment and iterate quickly.

The Bad: Subscription Model, Technical Issues, and Limitations

E. New subscription model

Unfortunately, GEN-3 isn't without its flaws. The most contentious issue is the new subscription model. Runway has abandoned its free tier, forcing users to pay a monthly fee to access even the most basic features. This has disappointed loyal users who feel betrayed by the sudden shift. While a free trial is offered, it's clear that Runway is betting on users becoming reliant on the software before the trial ends.

F. Technical issues (after all, it’s Alpha)

Another major concern is the plethora of technical issues. The software is prone to crashes, particularly when dealing with complex projects or high-resolution footage. Rendering times can be excruciatingly slow, even on powerful machines.

G. Text-to-video

Furthermore, the AI capabilities, while impressive, are not without limitations. The text-to-video generation often produces results that are more artistic than realistic. The image-to-image generation can struggle with complex modifications and fine details. Inpainting and outpainting, while effective for simple tasks, often fail to produce convincing results for more intricate edits.

The Ugly: Unresponsive Customer Support and Lack of Transparency

H. Hubris

As stated (or implied), the person I dealt with at Runway, busy selling enterprise subscriptions, was a bit of a d--k. One of the most disappointing aspects of GEN-3 is the lackluster customer support. Users have reported long response times, unhelpful generic troubleshooting tips, and a general lack of transparency from Runway regarding the software's limitations and future development plans. This leaves users feeling frustrated and unsupported, particularly given the subscription model's financial commitment.

The Verdict: A Promising Step Forward, But Not Without Its Stumbles

I. Step Forward

Runway GEN-3 represents a significant step forward in AI-powered video editing. Its innovative features open up new creative possibilities and streamline workflows. However, it's not without its flaws. The subscription model, technical issues, and limitations of the AI capabilities are major drawbacks.

J. $$$

Whether GEN-3 is worth the investment depends on your individual needs, your expectations, and whether your employer is your Sugar Daddy.

If you're looking for cutting-edge AI tools to experiment with and are willing to tolerate some technical hiccups, then GEN-3 might be worth considering. However, if you're looking for a polished, reliable video editing tool with robust customer support, you might want to look elsewhere.

Runway GEN-3 is a glimpse into the future of video creation. AI is poised to revolutionize the way we edit and produce video. While GEN-3 may not be the perfect embodiment of that revolution, it's a significant step in the right direction.

Go ahead and buy it. You know you want to. Hopefully you won’t need to deal with a human.

3. LLMs vs. LMMs: Are These Typos?

In the ever-evolving landscape of artificial intelligence (AI), Large Language Models (LLMs) and Large Multimodal Models (LMMs) have emerged as powerful tools with distinct capabilities. Both types of models leverage vast amounts of data to perform tasks, but they differ significantly in their inputs, outputs, and applications. This article aims to shed light on the key differences between LLMs and LMMs, providing a comprehensive overview of their unique features and highlighting popular examples of each.

Large Language Models (LLMs)

LLMs are AI models specifically designed to process and generate text. They are trained on massive text datasets, encompassing books, articles, code, and more. This extensive training enables LLMs to understand the nuances of human language, including grammar, syntax, and semantics. Consequently, LLMs excel at various text-based tasks, such as:

Text generation: LLMs can produce coherent and contextually relevant text, including articles, poems, code snippets, and even creative writing pieces.

Translation: LLMs can accurately translate text from one language to another, bridging communication gaps across different cultures.

Summarization: LLMs can condense lengthy documents into concise summaries, extracting key information and saving readers time.

Question answering: LLMs can comprehend complex questions and provide informative answers based on their vast knowledge base.

Chatbots and virtual assistants: LLMs can power conversational AI agents, enabling natural and engaging interactions with users.

Popular examples of LLMs include:

OpenAI's GPT (Generative Pre-trained Transformer) series: The GPT models, including GPT-3, GPT-4o, and their variants, have garnered significant attention for their impressive text generation capabilities and have found applications in various domains.

Google's LaMDA (Language Model for Dialogue Applications): LaMDA is a conversational AI model designed to engage in open-ended conversations with users, demonstrating a deep understanding of language and context.

Hugging Face's Transformers library: Strictly speaking a library rather than a single model, this open-source toolkit provides a wide range of pre-trained LLMs, making them accessible to developers and researchers for various NLP tasks.

Large Multimodal Models (LMMs)

LMMs, on the other hand, go beyond text processing and are capable of understanding and generating multiple types of data, including images, audio, video, and even 3D models. They are trained on diverse datasets that combine different modalities, enabling them to establish connections between various forms of information. This multimodal capability opens up a wide array of possibilities, such as:

Image captioning: LMMs can generate descriptive captions for images, aiding visually impaired individuals or enhancing image search functionalities.

Video summarization: LMMs can analyze videos and create concise summaries, highlighting key events and saving viewers time.

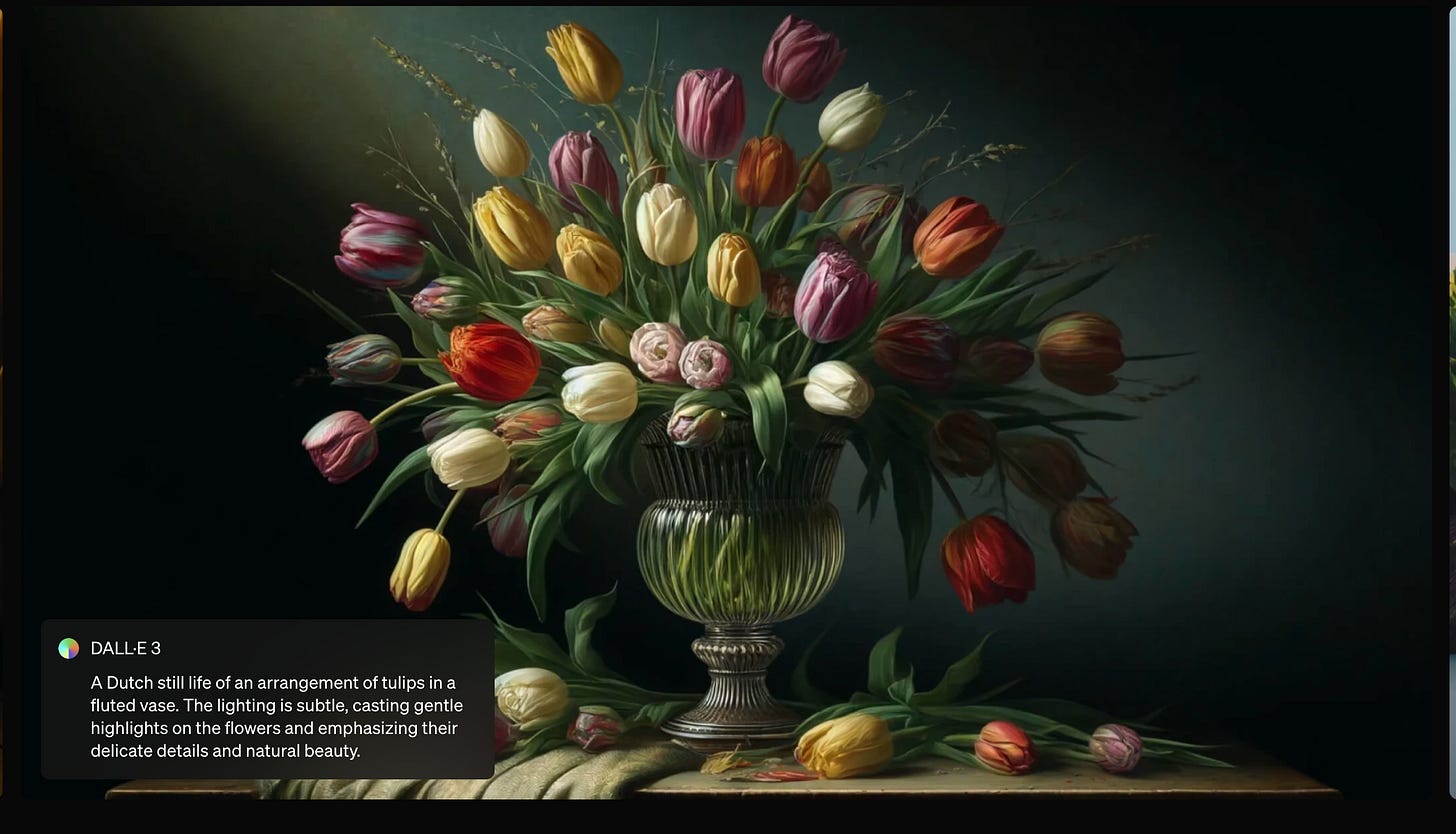

Text-to-image generation: LMMs can generate images based on textual descriptions, enabling creative applications like art generation and design.

Audio transcription and translation: LMMs can convert spoken language into text and translate it into different languages, facilitating communication across linguistic barriers.

Robotics and autonomous systems: LMMs can equip robots with the ability to perceive and interact with their environment through multiple senses, enabling them to perform complex tasks.

Popular examples of LMMs include:

OpenAI's DALL-E and CLIP: DALL-E generates images from textual descriptions, while CLIP measures the similarity between text and images, enabling various applications in image search and retrieval.

Google's MUM (Multitask Unified Model): MUM is a multimodal model designed to understand information across different formats, including text, images, and videos, and provide comprehensive answers to complex queries.

DeepMind's Perceiver: Perceiver is a versatile LMM architecture capable of processing various data modalities in a unified manner, demonstrating potential in diverse applications.
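To make the CLIP idea a little more concrete, here is a rough sketch of how "measuring similarity between text and images" can work once both have been turned into vectors in a shared space. Everything in it is an assumption for illustration: the three-number embeddings are made up, the file names are hypothetical, and the helper functions are mine rather than CLIP's actual API. Real models produce much longer vectors from trained encoders.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction to compare")
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, invented for this example (not output from any real model).
TEXT_EMBEDDING = [0.9, 0.1, 0.0]  # stands in for the prompt "a photo of a dog"
IMAGE_EMBEDDINGS = {
    "dog.jpg": [0.8, 0.2, 0.1],
    "beach.jpg": [0.1, 0.9, 0.3],
}


def best_match(text_vec, image_vecs):
    """Return the name of the image whose embedding is closest to the text embedding."""
    return max(image_vecs, key=lambda name: cosine_similarity(text_vec, image_vecs[name]))


if __name__ == "__main__":
    print(best_match(TEXT_EMBEDDING, IMAGE_EMBEDDINGS))
```

Image search and retrieval amount to the same ranking step at a much larger scale: embed the query once, then sort the stored image vectors by similarity.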

Summary

In conclusion, LLMs and LMMs represent two distinct branches of AI development, each with unique strengths and applications. LLMs excel at text-based tasks, while LMMs expand their capabilities to encompass multiple data modalities. As research in this field progresses, we can anticipate further advancements in both types of models, leading to new and innovative applications across various domains.

For Example: Runway GEN-3

Key points that confirm Runway GEN-3 as an LMM:

Multimodal Training: GEN-3 was trained on a large dataset of both images and videos, allowing it to understand and generate complex visual content.

Text-to-Video Generation: Its ability to create videos from textual descriptions showcases its capability to bridge the gap between language and visual representation.

Image-to-Video and Video-to-Video Manipulation: GEN-3 can also modify existing videos or create new ones based on input images, further demonstrating its multimodal nature.

Overall, Runway GEN-3's focus on video generation and manipulation using various input modalities aligns it more with the characteristics of LMMs than LLMs.
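If it helps to see the LLM/LMM split as a rule rather than a paragraph, here is a minimal sketch. It assumes the only thing that matters is which modalities a model takes in and puts out. The `ModelProfile` class and `classify` function are hypothetical names of my own, and the profiles reflect how the models are described in this article, not any vendor's spec sheet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical summary of a model: its name plus input and output modalities."""
    name: str
    inputs: frozenset
    outputs: frozenset


def classify(profile):
    """Label a model 'LLM' if it only touches text, otherwise 'LMM'."""
    modalities = profile.inputs | profile.outputs
    if not modalities:
        raise ValueError("profile must list at least one modality")
    return "LLM" if modalities <= {"text"} else "LMM"


# Profiles as described in this article (illustrative, not official specifications).
GPT3 = ModelProfile("GPT-3", frozenset({"text"}), frozenset({"text"}))
DALL_E = ModelProfile("DALL-E", frozenset({"text"}), frozenset({"image"}))
GEN3 = ModelProfile("Runway GEN-3", frozenset({"text", "image", "video"}), frozenset({"video"}))

if __name__ == "__main__":
    for model in (GPT3, DALL_E, GEN3):
        print(f"{model.name}: {classify(model)}")
```

By this rule of thumb, anything that accepts or produces something other than text lands in the LMM bucket, which is where GEN-3's text, image, and video inputs put it.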

Feel free to share comments below. If you enjoyed this read and you’re not lame, please smash the sh-t out of the heart icon at the top or bottom of the page so others can find this article. Re-stacking is great too (that’s the green thingie on the right).

Thanks for reading The Ad Stack! Subscribe for free to receive new posts and support my work.